STOP 1 AI Assistant

Designing Scalable, Trustworthy AI Support for University Student Services

The challenge was to design an AI-powered student services agent that could meaningfully reduce friction and response times across thousands of time-sensitive enquiries while operating within the complexity of university systems, policies, and privacy constraints. The agent needed to deliver accurate, empathetic, and accessible support across a wide range of student needs, many of them high-stress or sensitive, without overstepping institutional boundaries or undermining trust in human services. It also had to recognise its own limits, clearly escalate complex or high-risk situations to staff, and complement rather than replace existing support structures. The broader aim was to reimagine how students access help in a way that is scalable, equitable, and trustworthy.

My Role

Design Process

The design process began with extensive user research to understand students’ needs, behaviours, language, and pain points. We conducted interviews and co-design sessions and developed detailed personas, capturing diverse perspectives, including those of students with varying accessibility requirements. Mapping journey flows, user flows, and access points in Miro allowed us to visualise how students discover and interact with support services, revealing friction points, seasonal pressures, and key opportunities for timely intervention. Complementary research, including analysis of support tickets, emails, and call logs in Excel, highlighted recurring gaps and high-volume enquiries. Design precedent studies, conversational design reviews, response time analysis, naming research, and small iterative improvement studies, carried out in Google Docs and Pinterest, guided interaction patterns, terminology, and interface cues.

Improved Student Support at Scale

The project resulted in a human-centred AI agent that significantly improved accessibility, efficiency, and trust in student services. Previously, students often fell through the cracks because support staff were overwhelmed by high volumes of non-urgent enquiries, leaving limited capacity for critical or time-sensitive cases. Many students could not access guidance outside office hours, and urgent needs sometimes went unnoticed. The AI agent addressed this by providing 24/7 access to accurate, reliable support for common enquiries, translating complex institutional information into clear, actionable steps, and escalating students with urgent needs to human advisors without delay. Staff were able to focus on high-priority cases, improving triage quality and reducing the risk of important enquiries being missed. The system also built trust through transparent behaviour, policy-aligned responses, and responsible handover to human support, demonstrating how AI can enhance rather than replace human services at scale.

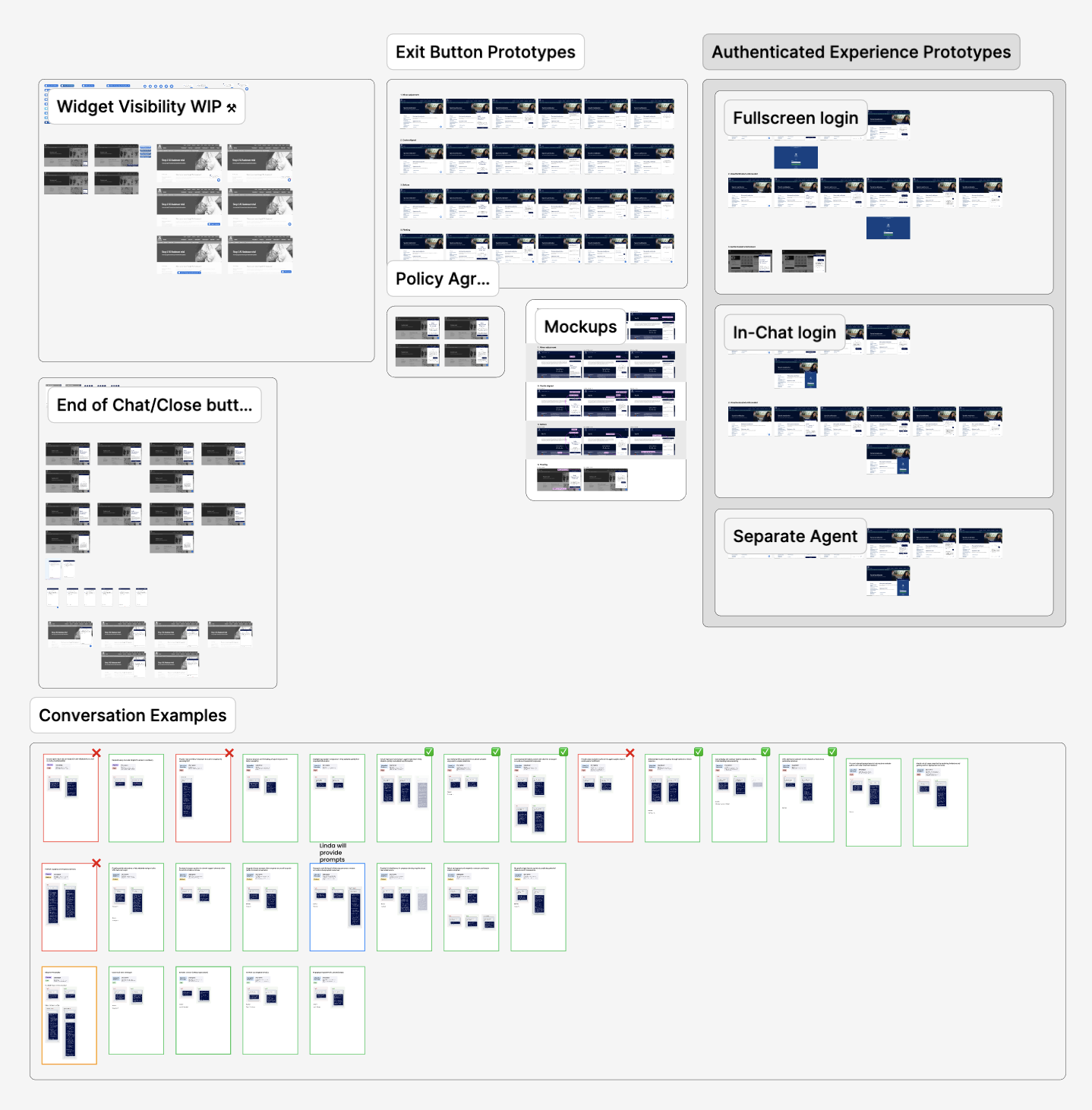

Further examples
