Connecting students for shared trips between campus and surrounding suburbs

Project overview

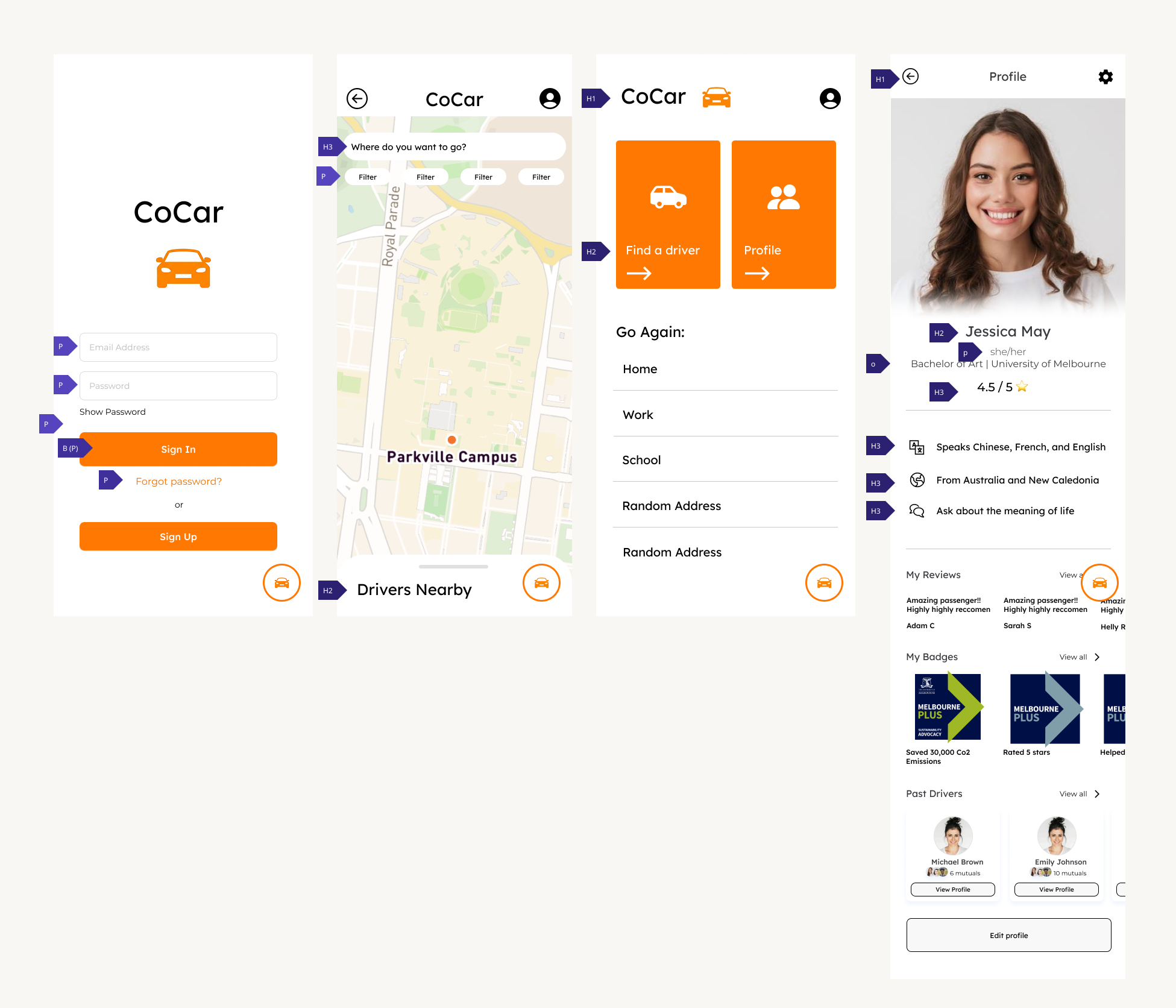

CoCar is a ride-sharing platform designed specifically for university students who want more accessible, affordable, and social ways to commute. Our project centred on designing "Coco," a friendly and inclusive chatbot that helps users register, estimate ride costs, find compatible student drivers, and get support. The aim was to replace clunky form-based flows with natural, contextual, and responsive interactions. Coco was developed through a rigorous, user-centred, iterative design process that prioritised accessibility and conversational UX. Our work spanned Figma wireframes, Voiceflow prototypes, Wizard of Oz testing, accessibility research, A/B testing, and chatbot personality crafting.

My Role

Design Process

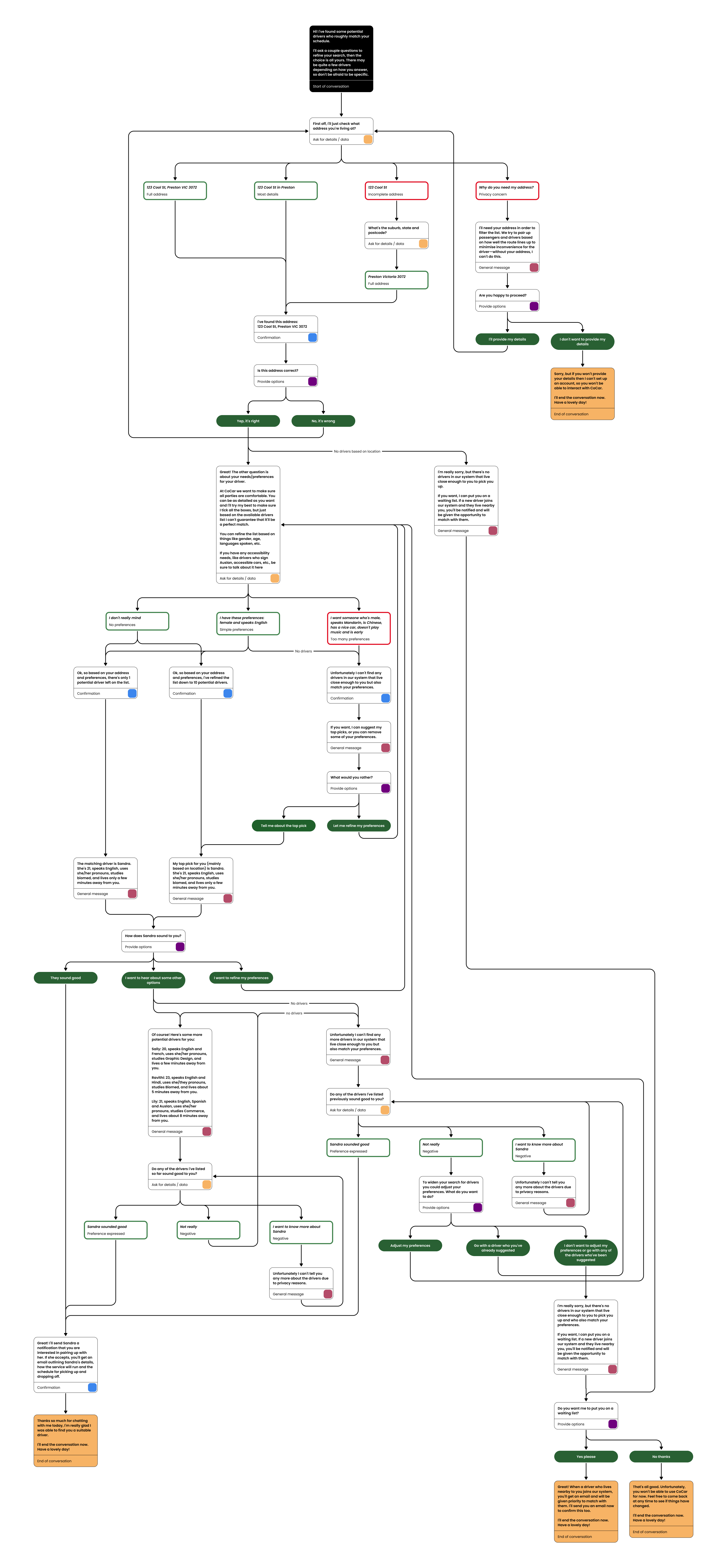

The design process began with extensive ideation and research to understand student needs, behaviours, and accessibility requirements. Using 10+10 sketching, HMW (How Might We) framing, and priority matrices, we explored dozens of ideas and mapped them against user journeys, focusing on the core chatbot functions: registration, fare estimation, driver matching, and FAQs.

Secondary research into student transport behaviours, accessibility needs, and conversational design best practices, together with real-world data from the University of Melbourne, PWDA, and NDIS, informed a set of personas representing diverse users, including people with mobility impairments, vision limitations, and sensory needs.

Early prototyping involved low-fidelity Figma flow diagrams, storyboards, and physical walkthroughs. These progressed to high-fidelity conversation flows in Figma and semi-functional Voiceflow prototypes used to test logic, tone, and usability. Accessibility work throughout included WCAG AAA colour contrast, responsive layouts, screen reader compatibility, and button-based interactions that reduce typing and cognitive load.

Product

The final chatbot was launched as a modular, intent-based system built in Voiceflow and supplemented with real-time APIs and JavaScript logic. Coco supported the key user tasks of driver matching, registration, cost estimation, and FAQs, using flexible flows that could handle mid-conversation topic changes. Inclusivity was central to the design from the start: interactions favoured buttons over free text to assist users with mobility impairments, layouts were responsive with readable fonts and WCAG AAA colour contrast, and the system supported screen readers, keyboard navigation, and voice-to-voice interactions for blind or low-vision users. Fallback flows and progressive prompts were built in to reduce cognitive load, demonstrating both technical robustness and strong accessibility consideration.

Further examples
